Calibrations Tool: Performance Reviews

15FIVE · 2021

Full in-depth case study available upon request

Want to reach out?

hi@jameskibinpark.com

Context

15Five is a human-centered continuous performance management platform, backed by positive psychology, that enables manager effectiveness. Our 360 Performance Review product aims to create highly engaged, high-performing organizations.

I led the end-to-end product design process on the Performance Review squad to design a Calibrations tool. Our goal was to design fair and objective performance reviews aligned with the latest positive psychology.

Platform offerings

360 Performance Reviews

OKRs

Engagement Surveys

Weekly Check-ins

Employee Recognition

1-on-1s

Career Planning

3 months from discovery to delivery

Team

1 Product Manager

1 Product Designer

1 FE Engineer

2 BE Engineers

1 Engineering Manager

1 Science Advisor

We design for…

Managers

Advocate for their reports, write reviews, and share review results

Participants

Write self reviews and seek transparency in the calibration process

HR Admins & Executives

Host calibration sessions, customize privacy & permissions, export data insights, and deal with any unsubmitted reviews

What are Calibrations?

15Five believes that humans are unreliable raters of other humans. Calibrations is a process (typically a meeting) unique to each organization used to reduce manager bias during the performance review and make talent decisions for the organization.

Biased Calibrations

Employee marks depend on manager’s influence

Managers rely on pre-existing impressions

Defer to opinions of higher level leaders

Unbiased Calibrations

Reference fair performance data: Competencies, OKRs, & Manager opinion

Use peer reviews to inform manager mark

Involve the participant’s voice

Problem

Calibrations were not reducing manager bias

Customers weren’t referencing performance data during calibrations

Customers didn’t know how to run calibrations

Customers wanted to track career data over time

15Five had a less competitive feature set

Design Opportunities & Goals

For our customers…

Reduce manager bias

Enable calibrations directly in 15Five

Align teams on promotion and compensation decisions

For 15Five…

Improve the review cycle UX

Enable a larger sales funnel

Design Process

Information architecture & user journey

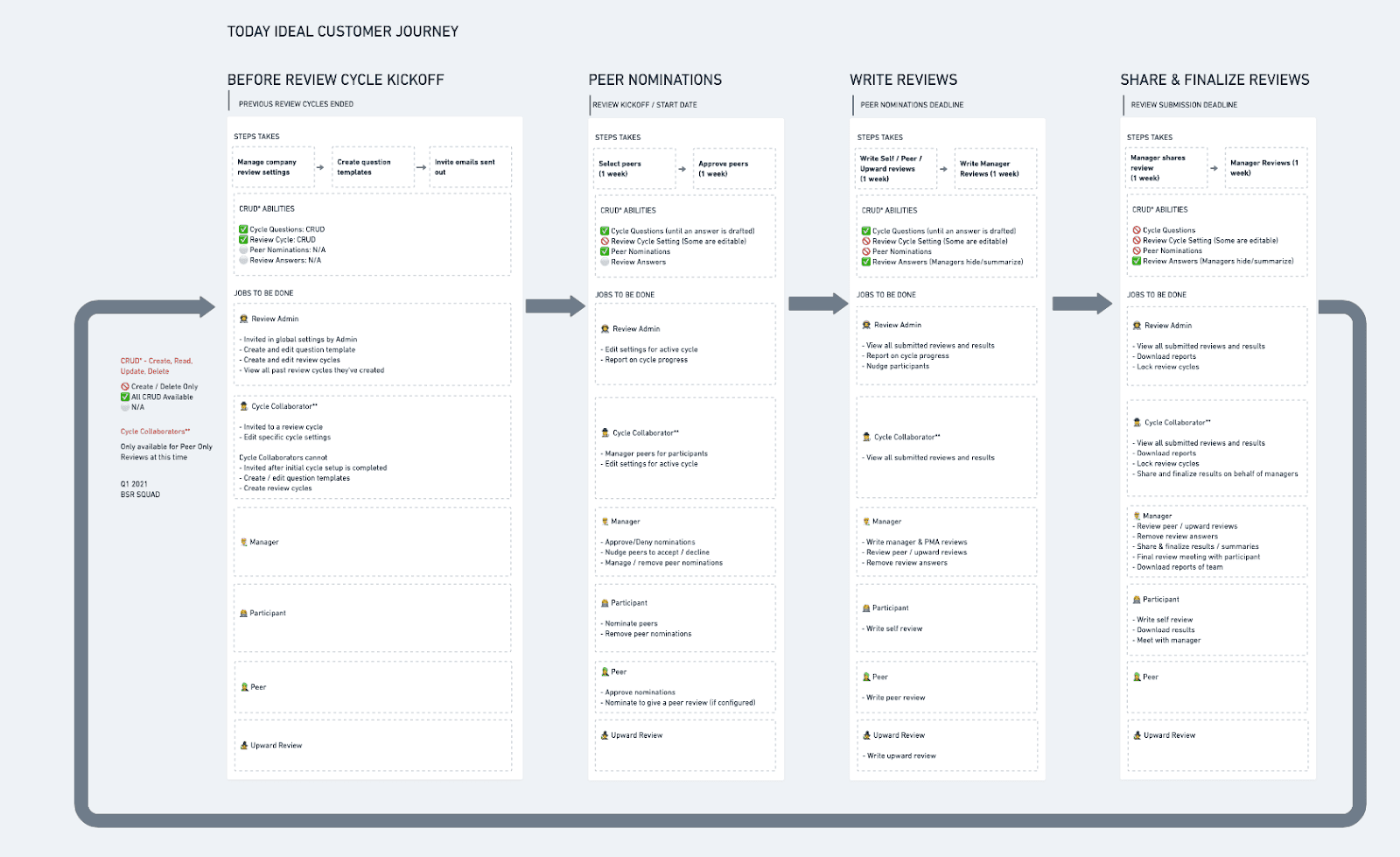

I started by understanding the review cycle IA as it existed today.

Review Cycle User Journey: Existing

Managers were conflating the share and finalize steps of the review cycle, which led them to share reviews prematurely. I created a user flow to clear up internal teams’ confusion about the existing functionality of 360 Review Cycles.

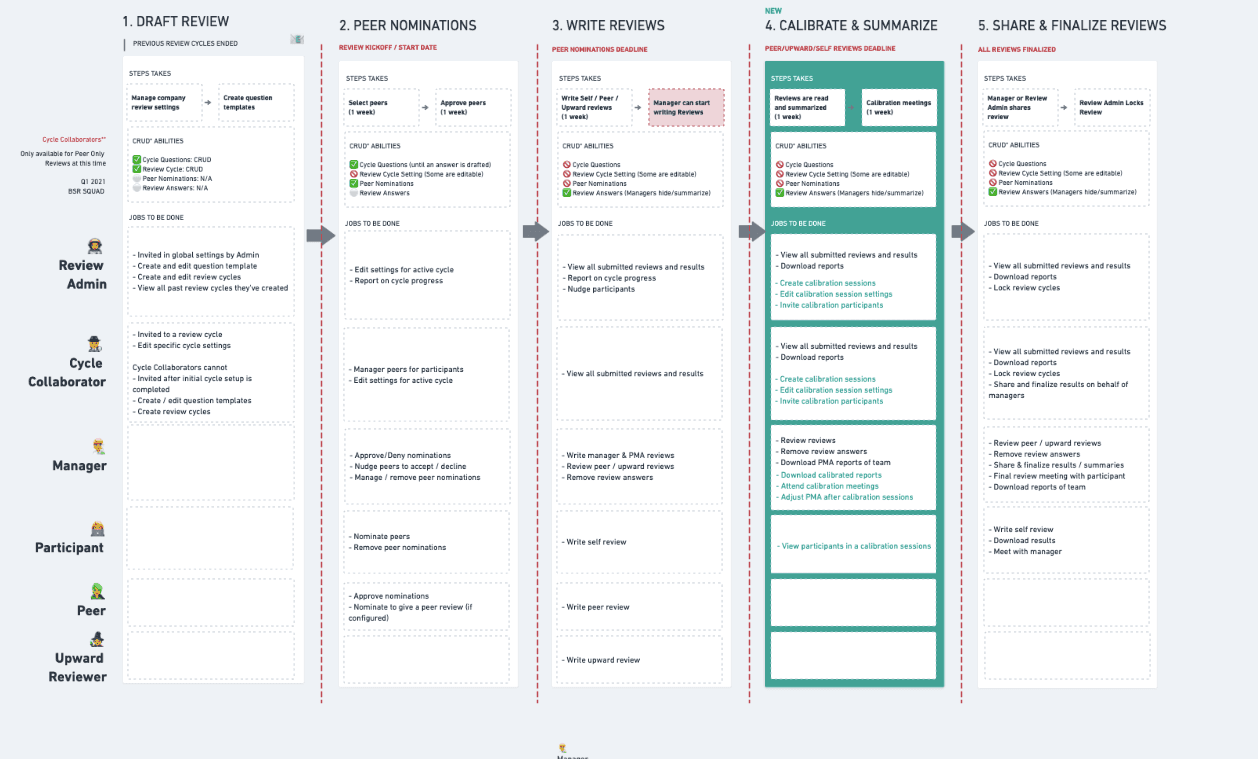

Review Cycle User Journey: Future

We prioritized a few UX improvements based on this customer journey before adding the complexity of a new Calibrations step. This user flow helped us understand how Calibrations would fit into 360 Review Cycles.

Customer Interviews

After facilitating a cross-functional stakeholder workshop to diverge and converge on our ideas for a calibrations feature, I wireframed the concepts that came out of the workshop to put in front of customers.

Concept 1

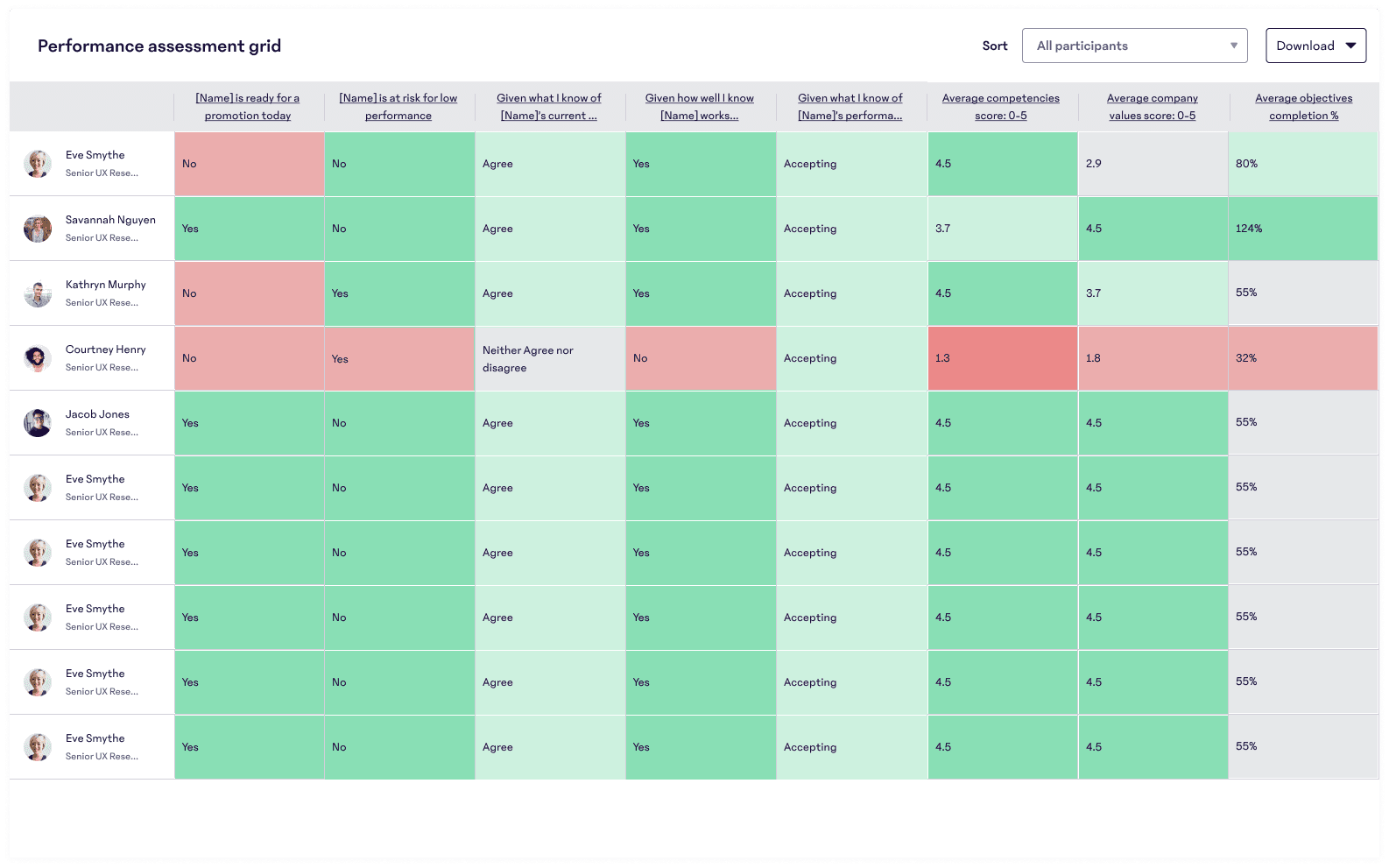

One concept explored the possibility of admins calibrating manager opinion questions in a grid view, for easy read and edit access.

Participant data in a grid

Update via grid or CSV upload

Activity feed for documentation

Accessible resources panel

Concept 2

The second concept I explored built on the Talent Matrix feature (15Five’s version of a 9-Box). Our team believed this concept would test favorably given how enthusiastic our PeopleOps team was about the matrix tool.

Participant data in a talent matrix

Update via drag-and-drop

Doc-style shared notes

Table of calibration movement

Research insights

Which concept did customers prefer? 5 of 7 participants gravitated towards Concept 1 over Concept 2, according to a Kano Model question I asked during the interviews. Users found this concept easier to understand, more informative, and more straightforward. Jessie, a PeopleOps Director, meets with managers to talk line by line through the performance of participants at the ends of the distribution curve. She mentioned that Concept 1 aggregates this data in a table and would streamline her process.

We also discovered why managers were running calibration sessions:

For greater manager alignment

To visualize a distribution

To discuss high and low performers

Calibrate more than the manager assessment

In addition, customers wanted to calibrate more than the manager assessment. I iterated on designs to include Competencies, Company Values, and Objectives in the grid. Ultimately this was ruled out of scope for our MVP.

Layer data from HRIS systems

How might we run fair and objective reviews and not marginalize BIPOC employees? Demographic data such as tenure and gender were factors HR leaders wanted to understand in the context of calibration sessions. This finding was not addressed in our MVP because it required more research into legal and privacy implications.

Decide the right performance rating methodology

HR leaders struggled with the idea of using a number rating system to evaluate employees, but did not know of an alternative for running their calibration sessions. Participants were curious about the positive psychology methodology that 15Five brought into calibrations.

Calibrating competencies, OKRs, and manager reviews

Wireframing & feedback process

After talking with customers, I began wireframing the significant flows in the experience. To get to an MVP state, I used feedback from conversations with PMs and tech leads, stakeholder reviews, and design critiques.

1) Review cycle creation

2) Calibration sessions

3) Data reporting

4) Sharing and finalizing reviews

Wireframing main flows in Figma

Calibration MVP

Before the session

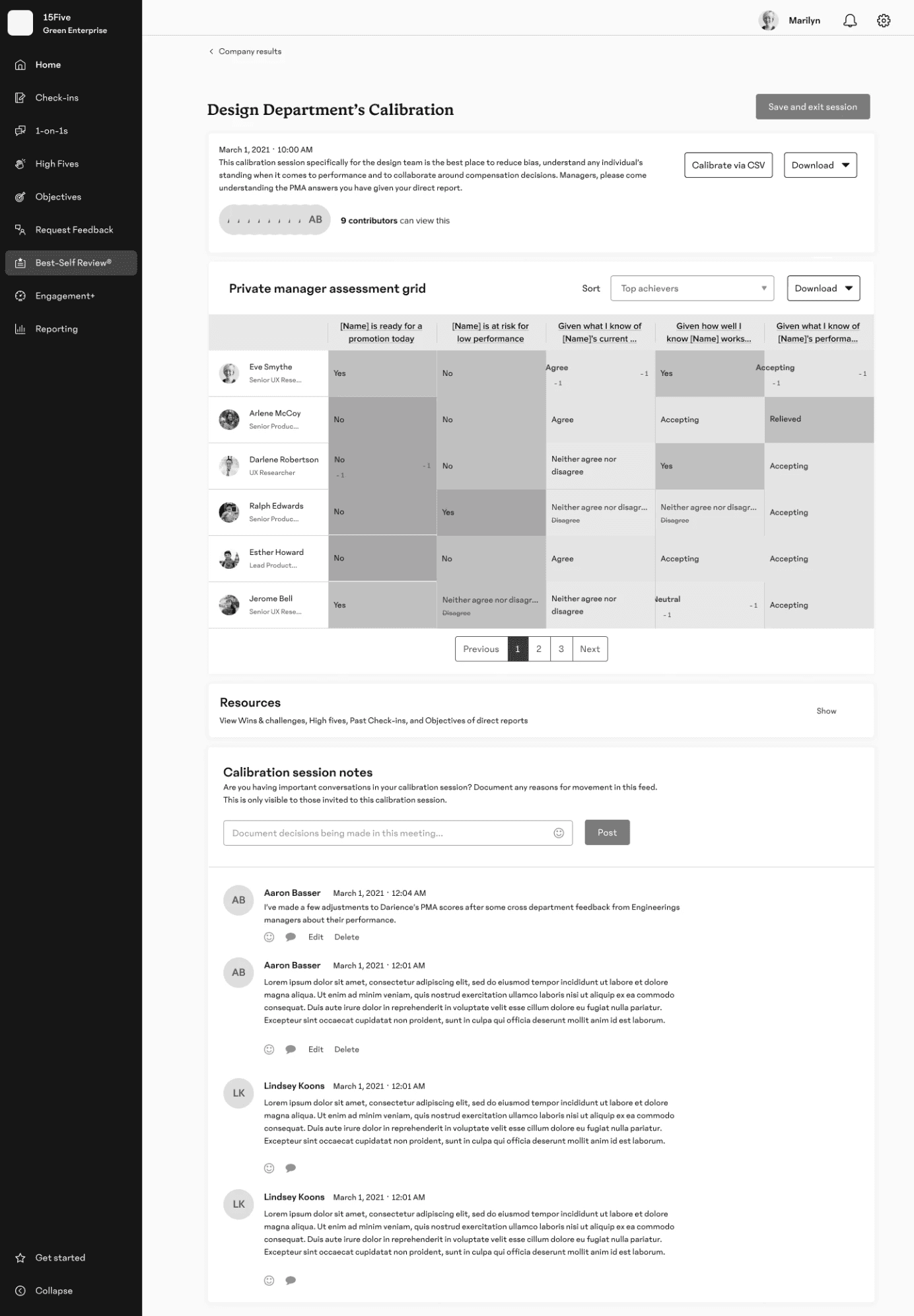

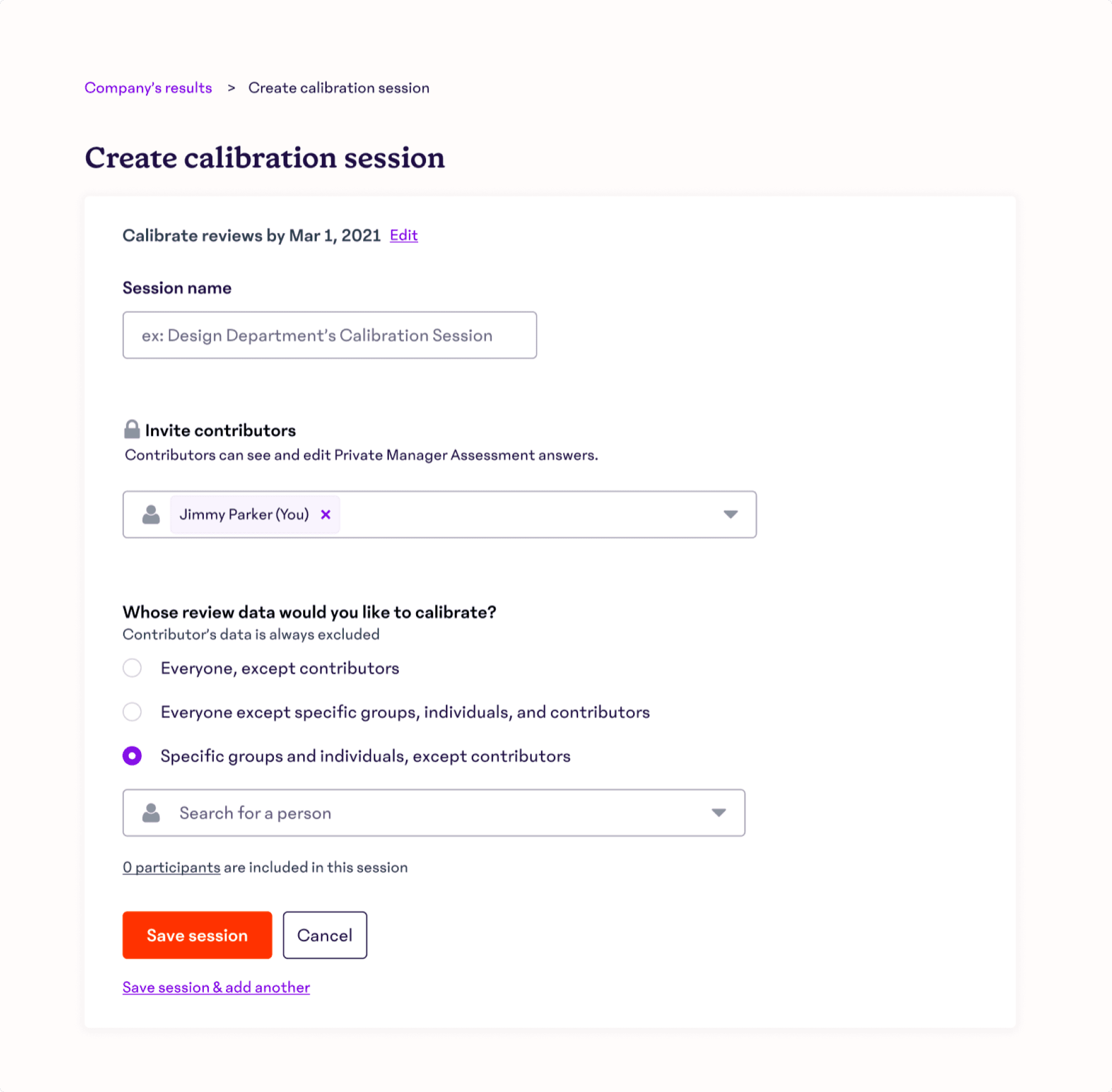

The calibration product we launched allowed an HR admin to create sessions flexibly to fit their organization’s needs. The creation form needed to be simple, but provide flexibility based on RBAC permissions. “Contributors” could be a manager or an external HR business partner. Calibration groups needed to always exclude contributors in order to uphold privacy standards. Designing for privacy and permissions was particularly challenging because of the variety of company sizes we design for. SMB and enterprise leaders needed to create calibration sessions quickly and easily, giving access to exactly the people who needed it.
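The privacy rule described above (a session’s calibration group always excludes its contributors) can be sketched in a few lines of Python. This is an illustrative sketch only, not 15Five’s implementation: the `CalibrationSession` class, its field names, the `build_session` helper, and the sample names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CalibrationSession:
    """Hypothetical model of a calibration session (names are illustrative)."""
    name: str
    contributors: frozenset  # people who can view and edit the grid
    participants: frozenset  # people whose reviews are being calibrated


def build_session(name, contributors, candidates):
    """Create a session whose calibration group never includes a contributor.

    Assumption: contributors are dropped from the group automatically,
    so nobody can view or edit their own calibration.
    """
    contributors = frozenset(contributors)
    participants = frozenset(candidates) - contributors
    return CalibrationSession(name, contributors, participants)


# Made-up example data: "maria" is both a contributor and a candidate.
session = build_session(
    "Design org",
    contributors={"maria", "hr_partner"},
    candidates={"maria", "sam", "lee"},
)
print(sorted(session.participants))  # ['lee', 'sam']
```

Silently removing contributors is only one option. A creation form could instead surface a validation message so the admin understands why someone was left out of the group.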

Calibration creation page

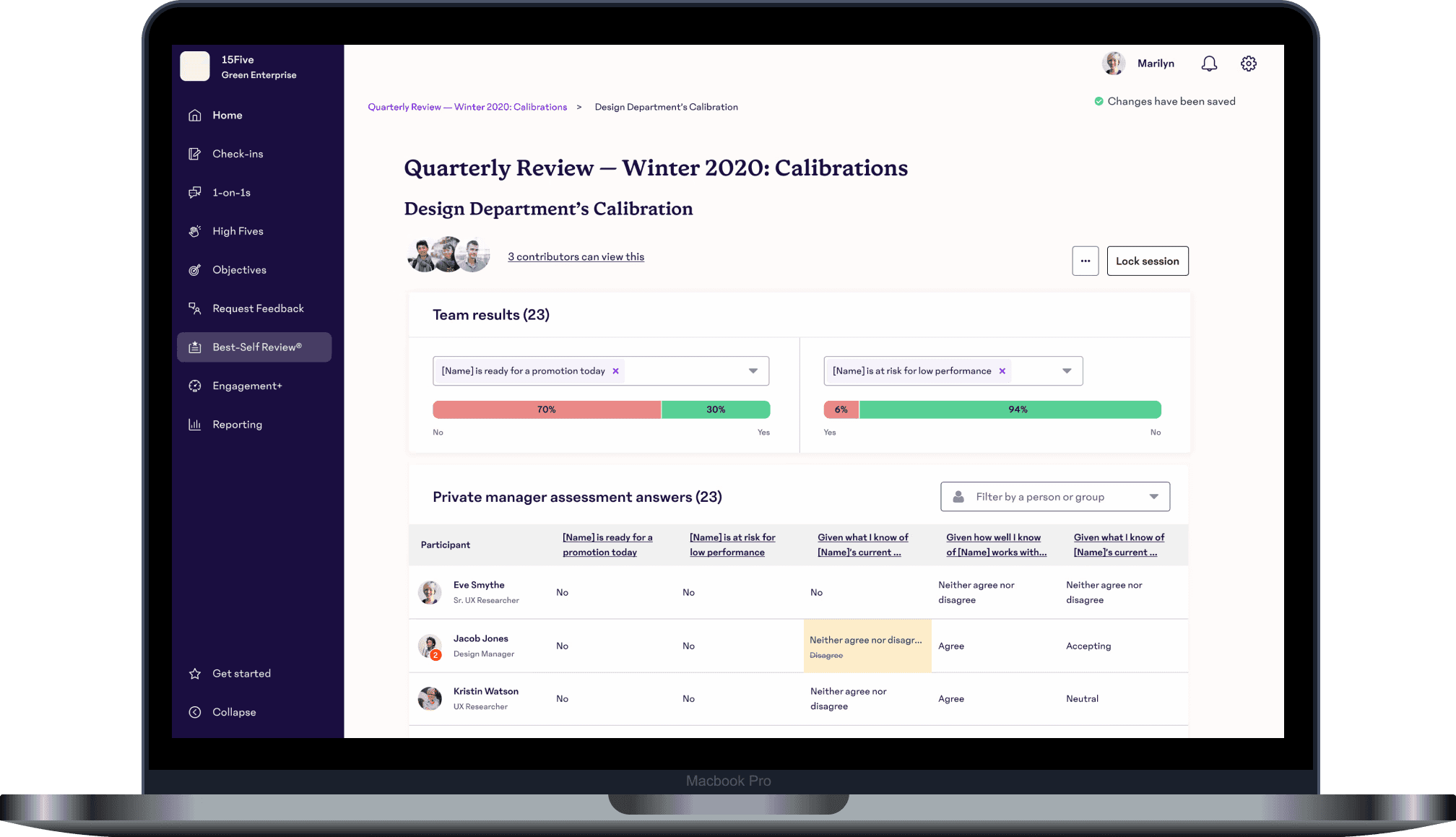

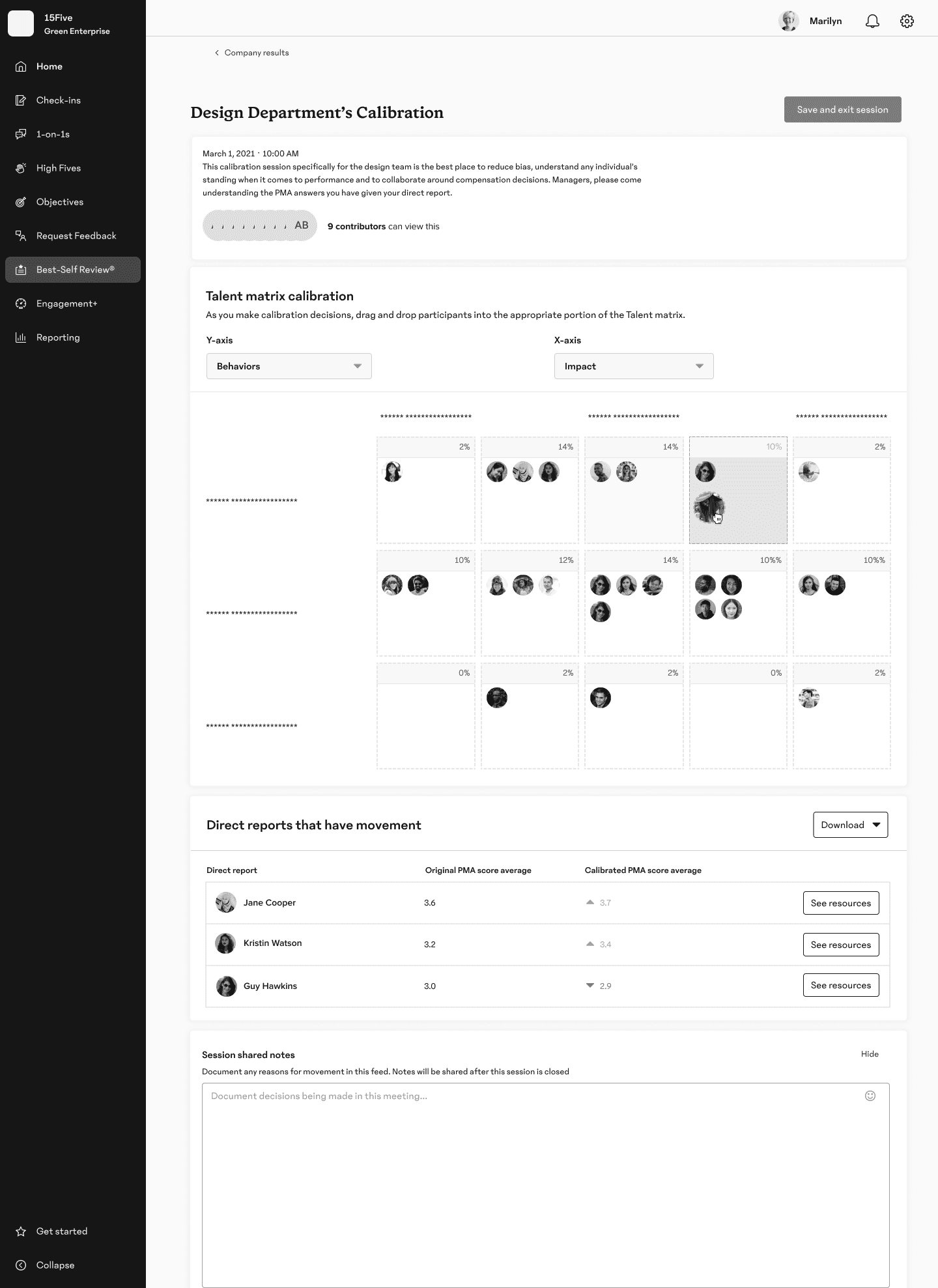

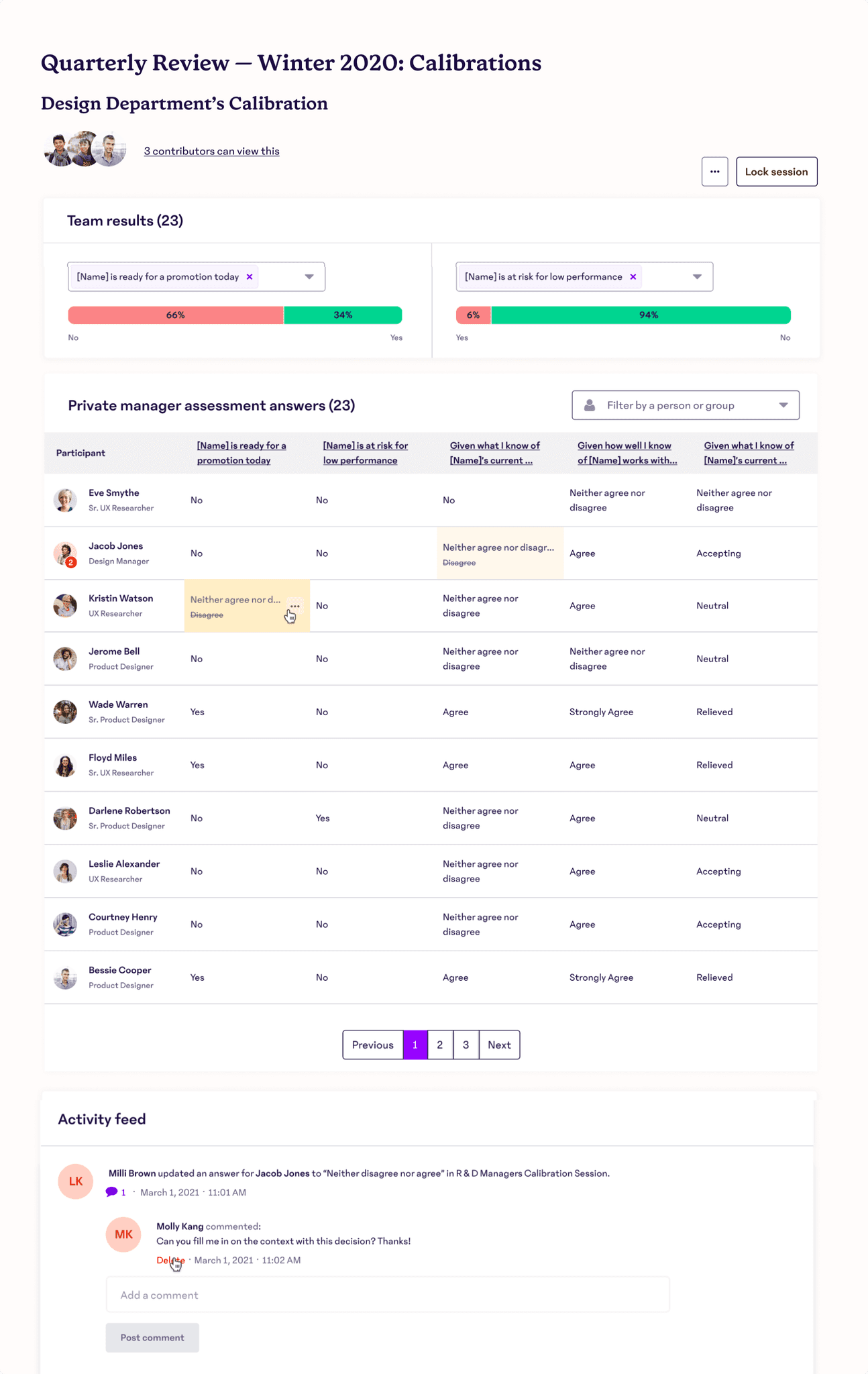

During the session

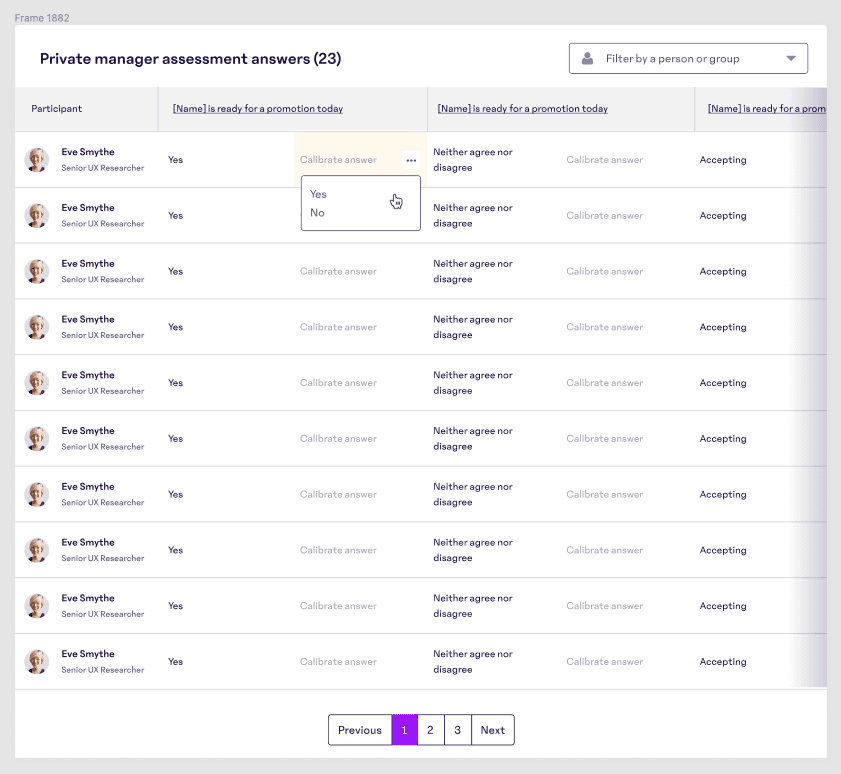

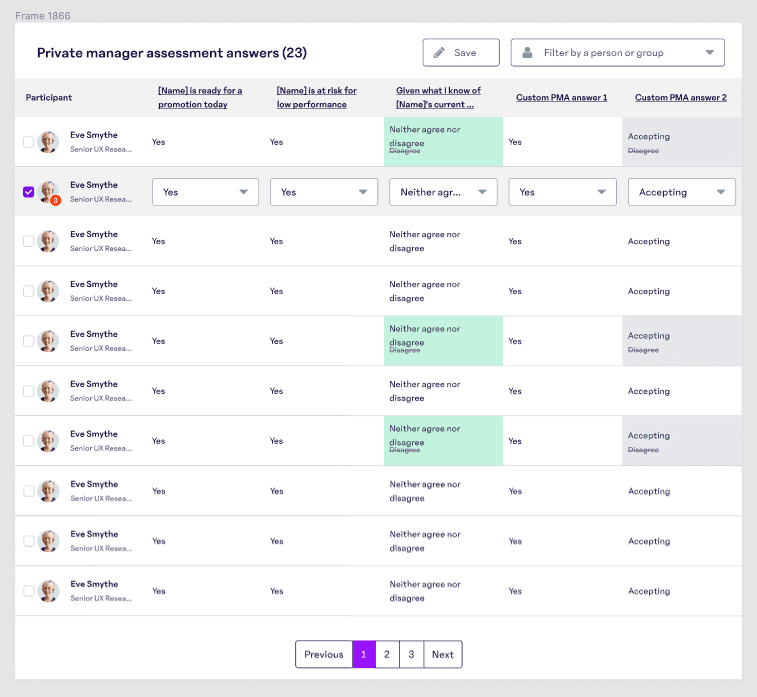

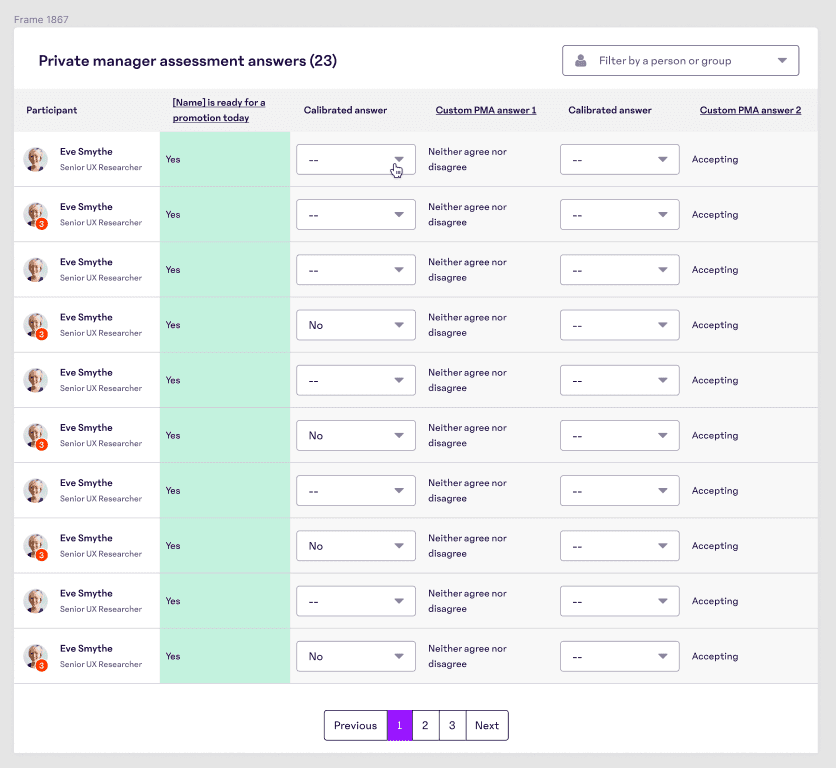

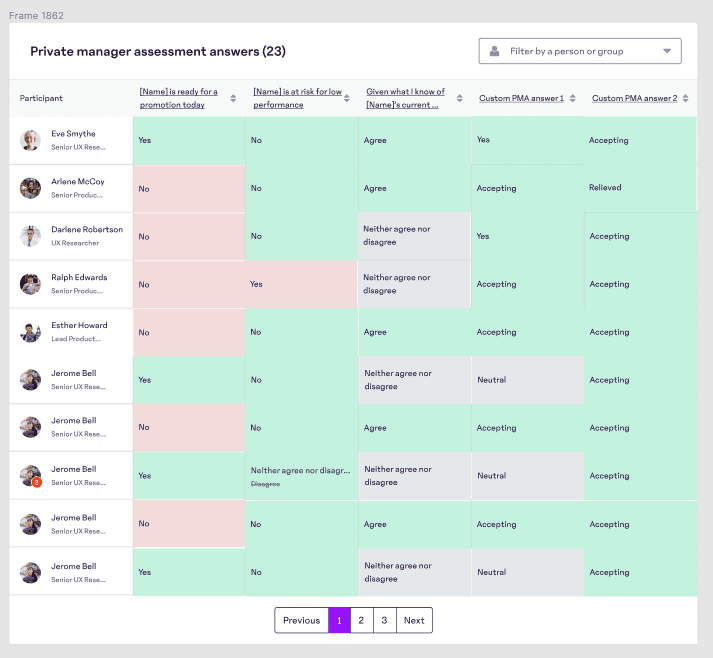

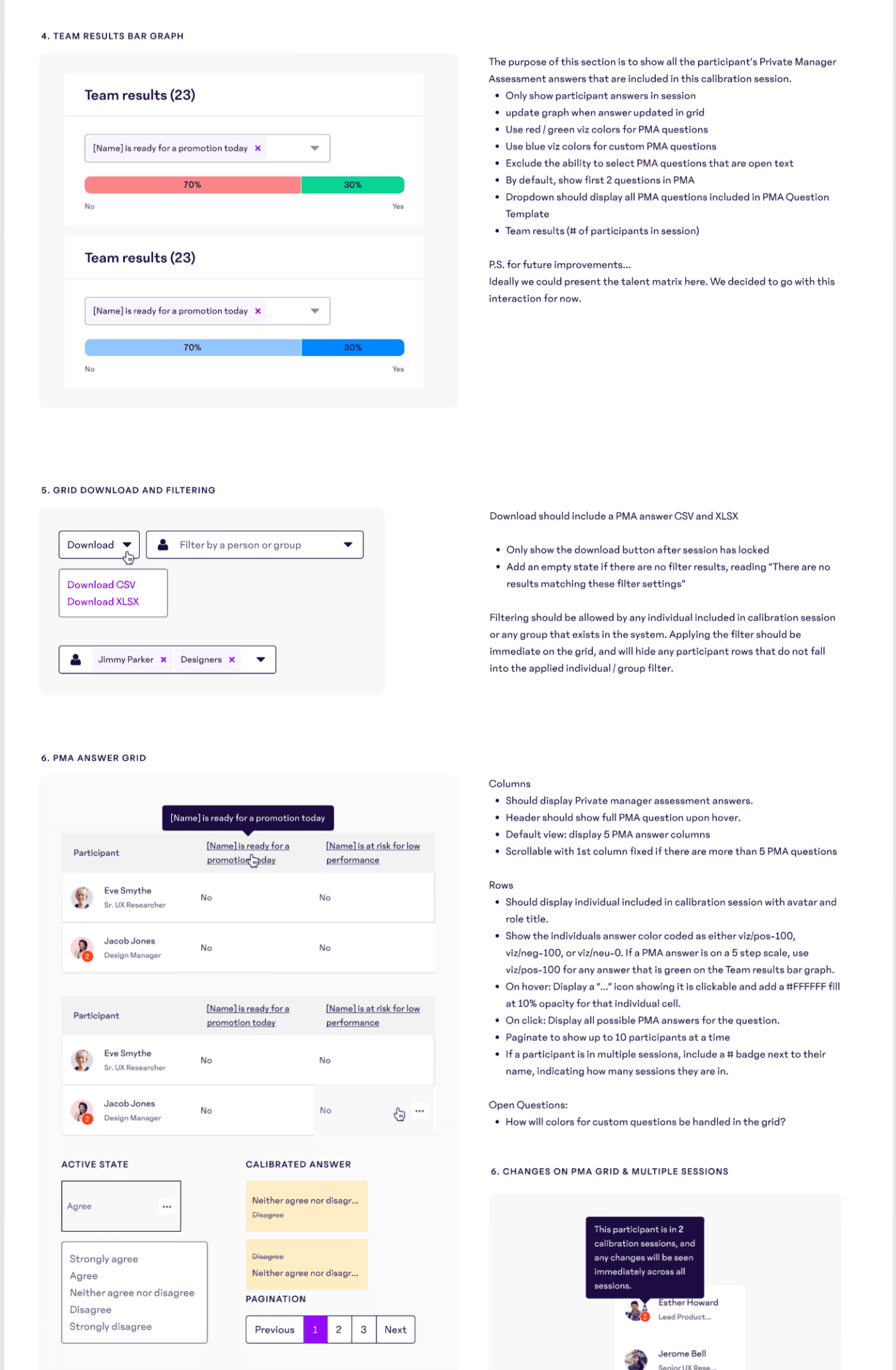

Managers and HR leaders needed a way to understand team insights in real time as changes were made to the manager assessment grid below. The solution uses two fields that managers can edit to view a distribution on specific insights. I iterated on displaying all questions, creating an accordion interaction, and showing a Talent Matrix, but ultimately landed on an abbreviated bar chart UI that managers could customize.

Participants’ manager assessments populate the grid. Contributors in a session can update any answer in the grid. Participants can be in multiple sessions. Admins can filter by participant or user group. Calibrated answers needed to be legible and stand out with a subtle highlight.

Finally, all changes to the assessment grid would be documented in the activity feed, which contributors would be able to comment on to capture async dialogue and decisions. The ability to comment directly on the feed was scoped out of the MVP, so users could only reply to an activity.
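The grid behavior described above (contributors update answers, calibrated answers are flagged for highlighting, every change lands in the activity feed, and users can reply to an activity) can be sketched as follows. This is a minimal sketch under stated assumptions, not the shipped data model: `Activity`, `AssessmentGrid`, and the sample question and names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Activity:
    """One recorded change to the grid (hypothetical structure)."""
    contributor: str
    participant: str
    question: str
    old_answer: str
    new_answer: str
    replies: list = field(default_factory=list)  # replies to this activity


class AssessmentGrid:
    def __init__(self, answers):
        # answers maps (participant, question) -> original manager answer
        self.original = dict(answers)
        self.current = dict(answers)
        self.feed = []  # chronological activity feed

    def update(self, contributor, participant, question, new_answer):
        """Apply a calibrated answer and log the change to the feed."""
        key = (participant, question)
        old = self.current[key]
        if new_answer == old:
            return None  # nothing changed, so nothing is logged
        self.current[key] = new_answer
        activity = Activity(contributor, participant, question, old, new_answer)
        self.feed.append(activity)
        return activity

    def is_calibrated(self, participant, question):
        """True when the answer differs from the original (drives the highlight)."""
        key = (participant, question)
        return self.current[key] != self.original[key]


# Made-up example data.
grid = AssessmentGrid({("sam", "Promotion ready?"): "No"})
change = grid.update("maria", "sam", "Promotion ready?", "Yes")
change.replies.append(("hr_partner", "Agreed after reviewing peer feedback."))
```

Keeping the original answers alongside the current ones is what makes both the highlight and a summary of changed answers straightforward to derive.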

Calibration session page

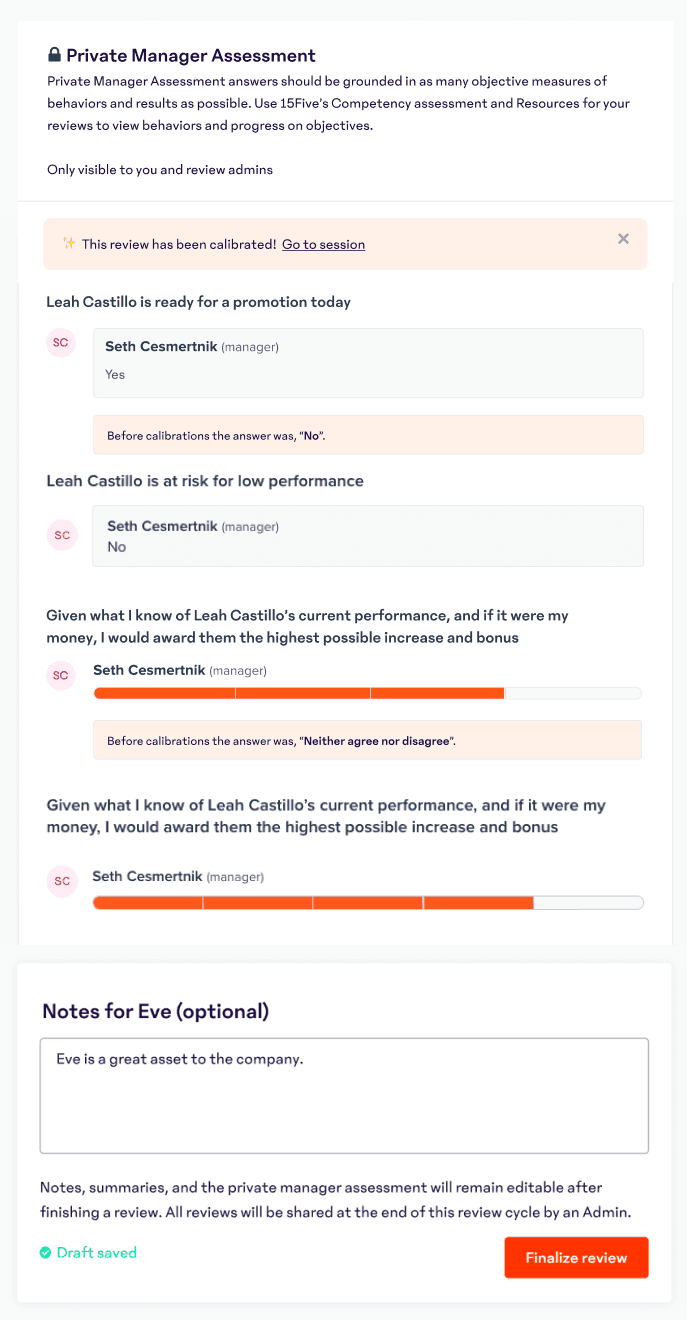

Wrapping up a session

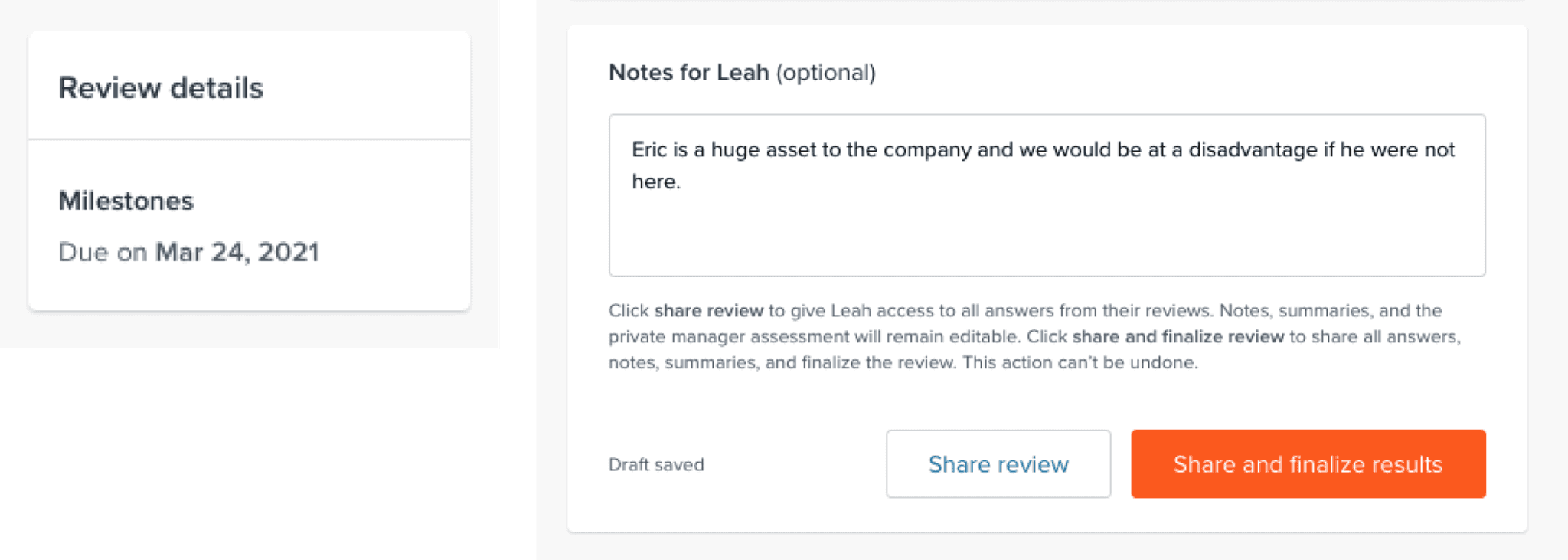

HR Admins had the ability to lock a session to prevent further updates. Calibration results could be shared and downloaded after this. Managers would be able to see the calibration status and changed answers in the summary before finalizing any updates to the manager review. The calibration results could help a manager navigate giving feedback during performance review conversations with their reports.

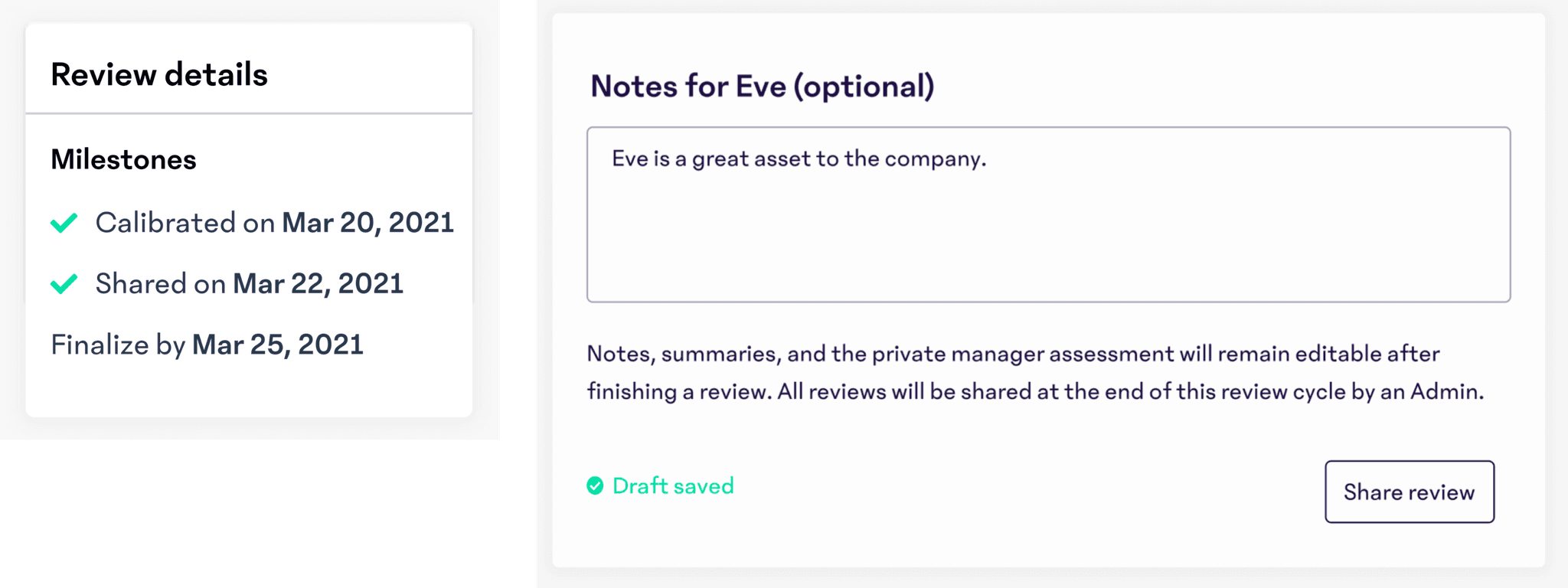

Sharing a review with reports

Additional context

Interaction design iterations

How might we design a simple and intuitive assessment grid that is also feasible for our eng team to quickly build? I iterated through different interactions to explore what felt easiest for users and feasible to build. I experimented with a few concepts…

displaying a column for the original and calibrated mark

an edit and save button interaction

form fields as opposed to on-hover icons

color coding, which ultimately felt too loaded

Iterations of the assessment grid design

Partnering with engineering

I created a component guide to document interactions for front-end engineers to reference while building. This artifact ensured specs, user flows, and edge cases were clearly detailed during development, and informed the creation of reusable React components for our design system.

UX debt cleanup

One of the largest product improvement requests from Customer Success was the ability to “unfinalize” reviews, driven by customer confusion around two buttons. Users often accidentally shared reviews by clicking the wrong button. We decoupled the share and finalize steps and added granularity to each, and gave Admins the ability to customize the individual due dates. I advocated for fixing this UX and tech debt before releasing the calibrations MVP because of the risk of potential confusion.

Before

After

The results

Launched and collected positive feedback

We launched an MVP of our Calibration tool and sought feedback from customers in a pilot. We heard positive feedback:

“We could... throw the calibration into our review process to be able to put a dashboard together for our leadership team, so I would be super excited to have that feature.” ~ Liz, HR Director

“definitely love this, because our CEO and our CFO have said they absolutely need to have this information in some way, shape or form…” ~ Kira, Talent Development

Reviews Adoption

We’ve seen a 5.5% increase in the percentage of customers running a BSR Cycle quarter-over-quarter.

Stronger product market fit

51% of Admins said they would be “Very disappointed” if they could no longer use 15Five’s Review tool, compared with only 20% across all user types. The company raised a Series C with Performance at the center of its product offering.

Reflections

1. During the discovery phase, I would have dug deeper into how HR leaders organize calibration sessions and whether that differs between Enterprise and SMB customers.

2. We had to scope out a Resources panel refactor, which would have given easy access to objectives, check-ins, and past reviews while writing a participant’s review. I would revisit this to make improvements.

3. When I joined the team, I was not involved early enough to understand the “why” behind prioritizing a Calibrations tool. I would have been more vocal about understanding whether this was the highest-impact feature.

Thanks for reading

© 2024 James Park. All Rights Reserved.
